[AWS] Lambda layers - Fetch VPCs + Terraform Project 08

Package dependencies/libraries using Layers


Inception

Hello everyone. This article is part of the Terraform + AWS series and does not depend on any previous article. I use this series to publish AWS + Terraform projects and knowledge.

Overview

Hello Gurus. AWS Lambda is a compute service that lets you run code without provisioning or managing servers. Lambda runs your code on a high-availability compute infrastructure and performs all of the administration of the compute resources, so you can write code that precisely meets your needs without worrying about server management.

Today's example deploys a Lambda layer version and a Lambda function whose Python code fetches the details of existing VPCs.

Building-up Steps

Today we will build a Lambda layer and a Lambda function whose Python code fetches existing VPC details using the boto3 library provided by AWS. The infrastructure will be built using Terraform.✨

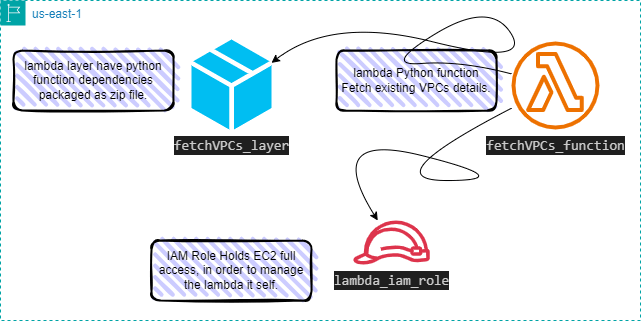

The Architecture Design Diagram:

Build-up steps in detail:

- Create a Python script that fetches existing VPCs using the boto3 library.
- Create a Bash script that packages the code dependencies as a zip file.
- Run the Bash script from the Terraform code using null_resource.
- Deploy a Lambda layer that holds the created zip package.
- Deploy an IAM role with EC2 full access so the function is allowed to describe VPCs.
- Deploy a Lambda function that uses:
  - The created Python script.
  - The deployed layer package.
  - The deployed IAM role.

Enough talking, let's move forward...😉

Clone The Project Code

Clone the repository to your local machine as follows:

pushd ~ # Change Directory

git clone https://github.com/Mohamed-Eleraki/terraform.git

pushd ~/terraform/AWS_Demo/15-lambda_layers

- Open it in VS Code, or any editor you like

code . # open the current path into VS Code.

Terraform Resources + Code Steps

Once you open the code in your editor, you will notice that the resources have already been created. However, we will go through how to create them together, step by step.

Create Python script

Create a Python script that fetches the details of the existing VPCs, as in the following code.

- Create a new file under the scripts directory called fetch_vpcs.py

import boto3

def lambda_handler(event, context):
    # EC2 client for the region used throughout this project
    ec_client = boto3.client('ec2', region_name="us-east-1")

    try:
        # The API call sits inside the try block so that request errors are caught too
        all_available_vpcs = ec_client.describe_vpcs()
        vpcs = all_available_vpcs["Vpcs"]

        # Loop over the VPCs and print the details of each one
        for vpc in vpcs:
            vpc_id = vpc["VpcId"]
            cidr_block = vpc["CidrBlock"]
            state = vpc["State"]
            print(f"VPC ID: {vpc_id} with {cidr_block} state = {state}")
    except Exception as error:
        print(f"Error occurred: {error}")
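To check the formatting logic without touching AWS, the loop can be pulled into a small helper and fed a hand-written response. This is only a sketch: `format_vpcs` and the sample response are hypothetical and not part of the project; the dictionary keys mirror the ones the handler reads.

```python
def format_vpcs(response):
    """Return one summary line per VPC in a describe_vpcs-style response."""
    lines = []
    for vpc in response.get("Vpcs", []):
        lines.append(
            f"VPC ID: {vpc['VpcId']} with {vpc['CidrBlock']} state = {vpc['State']}"
        )
    return lines


# Hand-written sample shaped like the fields the handler reads (made-up values).
sample_response = {
    "Vpcs": [
        {"VpcId": "vpc-0example1", "CidrBlock": "10.0.0.0/16", "State": "available"},
        {"VpcId": "vpc-0example2", "CidrBlock": "172.31.0.0/16", "State": "available"},
    ]
}

for line in format_vpcs(sample_response):
    print(line)
```

Keeping the formatting in a plain function like this makes it easy to test locally, while the handler itself stays a thin wrapper around the boto3 call.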

Create Bash script

Create a Bash script that packages the code dependencies as a zip file.

- Create a Bash script under the scripts directory called create_layer.sh

#!/bin/bash

# Create a directory that holds all function dependencies
mkdir -p scripts/fetchVPCs_dependencies; cd scripts/fetchVPCs_dependencies

# Create a virtual environment with python3.11
python3.11 -m venv fetchVPCs_depens_env

# Activate the virtual environment
source ./fetchVPCs_depens_env/bin/activate

# Install boto3 library inside the virtual environment - install all the libraries here
pip install boto3

# Deactivate the virtual environment
deactivate

# Lambda adds /opt/python to the import path, so copy the installed packages
# into a top-level python/ directory before zipping
mkdir -p python
cp -r fetchVPCs_depens_env/lib/python3.11/site-packages/. python/

# Create a zip file named fetchVPCs_depens_packages.zip under scripts/ containing the python/ directory
zip -r ../fetchVPCs_depens_packages.zip python
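Lambda extracts a layer under /opt and, for Python runtimes, looks for libraries under /opt/python, so the packages need to sit under a top-level python/ folder inside the archive. A quick way to check a built package is sketched below. `has_python_prefix` and `make_demo_zip` are hypothetical helpers, and the demo builds throwaway in-memory archives rather than touching the real one.

```python
import io
import zipfile


def has_python_prefix(zip_source):
    """True if every file entry in the archive lives under a top-level python/ folder."""
    with zipfile.ZipFile(zip_source) as archive:
        names = [n for n in archive.namelist() if not n.endswith("/")]
    return bool(names) and all(n.startswith("python/") for n in names)


def make_demo_zip(entry_names):
    """Build an in-memory archive with empty files, for demonstration only."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        for name in entry_names:
            archive.writestr(name, "")
    buffer.seek(0)
    return buffer


good = make_demo_zip(["python/boto3/__init__.py"])
bad = make_demo_zip(["boto3/__init__.py"])
print(has_python_prefix(good))  # True
print(has_python_prefix(bad))   # False
```

Against the real package, the call would be `has_python_prefix("scripts/fetchVPCs_depens_packages.zip")`, run from the project root after the Bash script has finished.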

Configure the provider

Start building the Terraform resources.

Create a new file called configureProvider.tf and configure the provider as in the code below.

# Declare the required providers
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

# Configure aws provider
provider "aws" {
  region  = "us-east-1"
  profile = "eraki" # Remove this line if your AWS CLI is configured with the default profile
}

Deploy Lambda Resources

- Create a new file called lambda.tf and deploy the resources as in the steps below

Note: separate .tf files are a good way to reduce code complexity.

Create dependencies package layer

# Run the Bash script to install and package all dependencies
resource "null_resource" "create_fetchVPCs_layer" {
  provisioner "local-exec" {
    # The script uses source, which needs bash rather than plain sh
    command = "bash scripts/create_layer.sh"
  }
}

Deploy lambda layer

# Deploy lambda layer resource
resource "aws_lambda_layer_version" "fetchVPCs_layer" {
  # Wait until the dependencies package is available
  depends_on = [null_resource.create_fetchVPCs_layer]

  # File path of the created package
  filename   = "${path.module}/scripts/fetchVPCs_depens_packages.zip"
  layer_name = "fetchVPCs_layer"

  compatible_runtimes = ["python3.12", "python3.11"]
}
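Because the Lambda Python runtime already bundles its own copy of boto3, it is not obvious whether an import is served by the layer or by the runtime. One way to find out is to look at where Python resolved the module. A rough sketch, assuming layer content ends up under /opt/python as the AWS documentation describes; `module_origin` and `loaded_from_layer` are hypothetical helpers.

```python
import importlib.util


def module_origin(module_name):
    """Return the file path Python would import the module from, or None if not found."""
    spec = importlib.util.find_spec(module_name)
    return spec.origin if spec is not None else None


def loaded_from_layer(module_name, layer_root="/opt/python"):
    """True if the module resolves to a path under the layer directory."""
    origin = module_origin(module_name)
    return origin is not None and origin.startswith(layer_root)


# Inside the Lambda handler one could log module_origin("boto3");
# here a standard-library module stands in so the sketch runs anywhere.
print(module_origin("json"))
print(loaded_from_layer("json"))
```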

Deploy IAM Role

# Create an IAM role for the lambda function
resource "aws_iam_role" "lambda_iam_role" {
  name = "lambda_iam_role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Principal = {
          Service = "lambda.amazonaws.com"
        }
        Effect = "Allow"
        Sid    = "111"
      },
    ]
  })

  managed_policy_arns = [
    # EC2 full access AWS managed policy, so the function is allowed to describe VPCs
    "arn:aws:iam::aws:policy/AmazonEC2FullAccess"
  ]
}
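Terraform's jsonencode turns the HCL object into a JSON trust policy. The same document, built in Python to make its shape explicit, is sketched below, together with a narrower permissions policy that allows only ec2:DescribeVpcs. The narrower policy is an assumption about what the function needs, based on the single API call in the handler; it is not part of the original project.

```python
import json

# Trust policy: lets the Lambda service assume the role (mirrors the HCL above).
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Action": "sts:AssumeRole",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Effect": "Allow",
            "Sid": "111",
        }
    ],
}

# Hypothetical least-privilege alternative to AmazonEC2FullAccess.
describe_vpcs_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "ec2:DescribeVpcs", "Resource": "*"}
    ],
}

print(json.dumps(trust_policy, indent=2))
print(json.dumps(describe_vpcs_policy, indent=2))
```

The trust policy answers "who may assume this role", while the permissions policy answers "what may the role do"; the function needs both before it can call the EC2 API.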

Deploy Lambda Function

# Deploy lambda function
resource "aws_lambda_function" "fetchVPCs_function" {
  function_name = "fetch_vpcs_Function"

  # Path of the zip file that holds the Python script
  filename         = "${path.module}/scripts/fetch_vpcs.zip"
  source_code_hash = filebase64sha256("${path.module}/scripts/fetch_vpcs.zip")

  # handler name = file_name.python_function_name
  handler = "fetch_vpcs.lambda_handler"
  runtime = "python3.12"
  timeout = 120

  # Use the deployed role
  role = aws_iam_role.lambda_iam_role.arn

  # Use the deployed layer
  layers = [aws_lambda_layer_version.fetchVPCs_layer.arn]
}
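The function resource expects scripts/fetch_vpcs.zip to exist when Terraform evaluates the configuration, because filebase64sha256 reads the file. One way to produce the archive, and to reproduce the value that filebase64sha256 computes, is sketched below. `zip_single_file` and `base64_sha256` are hypothetical helper names, and the demo works in a temporary directory with a stand-in script.

```python
import base64
import hashlib
import tempfile
import zipfile
from pathlib import Path


def zip_single_file(source_path, zip_path):
    """Write source_path into zip_path at the archive root, so Lambda can import it."""
    source_path = Path(source_path)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.write(source_path, arcname=source_path.name)
    return Path(zip_path)


def base64_sha256(path):
    """Base64-encoded SHA-256 digest of a file's bytes."""
    digest = hashlib.sha256(Path(path).read_bytes()).digest()
    return base64.b64encode(digest).decode("ascii")


with tempfile.TemporaryDirectory() as workdir:
    script = Path(workdir) / "fetch_vpcs.py"
    script.write_text("def lambda_handler(event, context):\n    pass\n")
    package = zip_single_file(script, Path(workdir) / "fetch_vpcs.zip")
    with zipfile.ZipFile(package) as archive:
        print(archive.namelist())
    print(base64_sha256(package))
```

For the real project the call would be `zip_single_file("scripts/fetch_vpcs.py", "scripts/fetch_vpcs.zip")`, run from the project root before `terraform plan`.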

Apply Terraform Code

With the Terraform code configured, it's the exciting time to apply it and watch it become real. 😍

- First things first, let's make our code cleaner by:

terraform fmt

- Running a plan is always good practice (or you can even just apply 😁)

terraform plan

- Let's apply, if no errors appear and you agree with the resources to be built

terraform apply -auto-approve

Check & invoke lambda

After the code is applied successfully, it's time to check the run result of the function. Let's begin.

Open the Lambda console, and open your function.

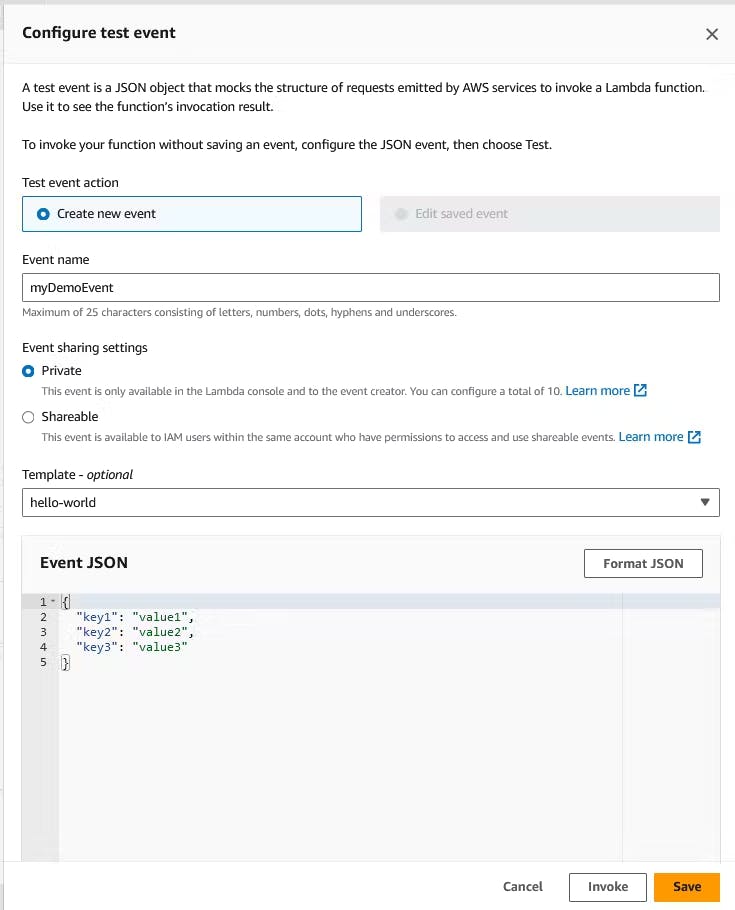

Scroll down to the Code source section and press Test.

Type any event name, then save, and press Test again.

The output should list each VPC with its ID, CIDR block, and state.
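The same test can be run from a script instead of the console. The sketch below assumes boto3 is installed locally and that credentials for the account are available to the default session (for a named profile such as the one in the provider block, a boto3 session with that profile would be needed). `decode_log_tail` and `invoke_and_get_logs` are hypothetical helpers; the function name and region come from the Terraform code.

```python
import base64


def decode_log_tail(log_result_b64):
    """Decode the base64 LogResult field returned when LogType='Tail' is requested."""
    return base64.b64decode(log_result_b64).decode("utf-8")


def invoke_and_get_logs(function_name="fetch_vpcs_Function", region="us-east-1"):
    """Invoke the function synchronously and return the tail of its execution log."""
    import boto3  # imported here so decode_log_tail works without boto3 installed

    client = boto3.client("lambda", region_name=region)
    response = client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        LogType="Tail",
        Payload=b"{}",
    )
    return decode_log_tail(response["LogResult"])


# Example (requires AWS credentials): print(invoke_and_get_logs())
```

Since the handler reports its findings with print, the VPC lines show up in the returned log tail rather than in the response payload.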

Destroy environment

Destroying the environment with Terraform is very simple.

- Destroy all resources using the terraform command

terraform destroy -auto-approve

That's it. Very straightforward, very fast🚀. I hope this article inspired you, and I would appreciate your feedback. Thank you.