[AWS] S3Bucket - MFT + Terraform Project 04

Managed File Transfer to/from an S3 Bucket (Bucket Policy)

Inception

Hello everyone. This article is part of the Terraform + AWS series. The examples in this series are built in sequence, and I use the series to publish projects and knowledge.

Overview

Hello Gurus. Managed file transfer: streamlining data flows with security and reliability. In today's data-driven world, businesses and organizations frequently need to move files reliably between systems, partners, and other organizations. Managed file transfer (MFT) solutions offer a way to streamline these processes. However, we are going to build our own solution here using an S3 bucket, an S3 bucket policy, the s3api command, and Python.

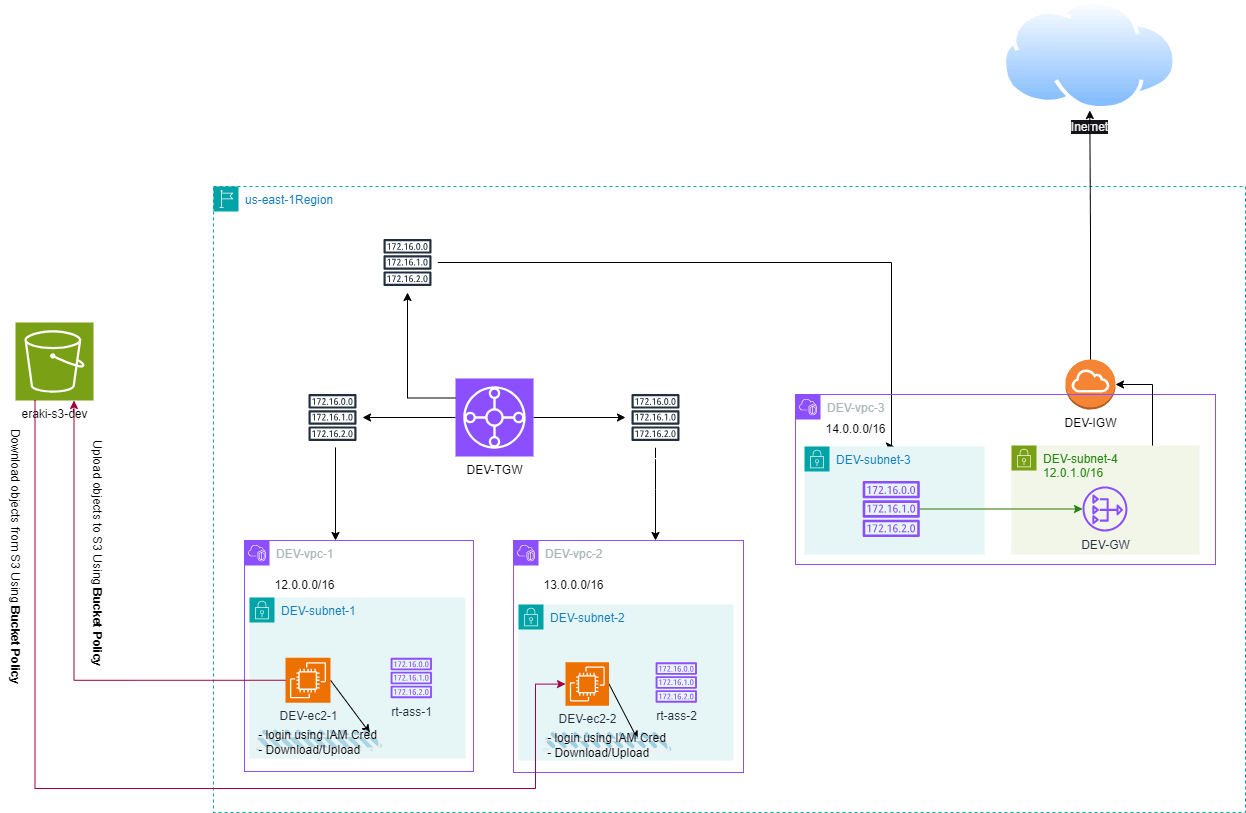

In the previous article, we discovered how to send and receive files between S3 and EC2 machines via a VPC endpoint.

However, today's example will use an S3 bucket as centralized object storage between EC2 machines, sending and receiving files automatically via a bucket policy.

Building-up Steps

We'll continue from the last article, where we built an S3 bucket together and linked it to the private VPCs using a VPC endpoint in order to allow EC2 machines to send and receive files.

Today we will build the same resources together (an S3 bucket, etc.). However, we will use an S3 bucket policy to allow an IAM user to send and receive files from the EC2 machines, using Terraform.✨

The Architecture Design Diagram:

building-up steps Details:

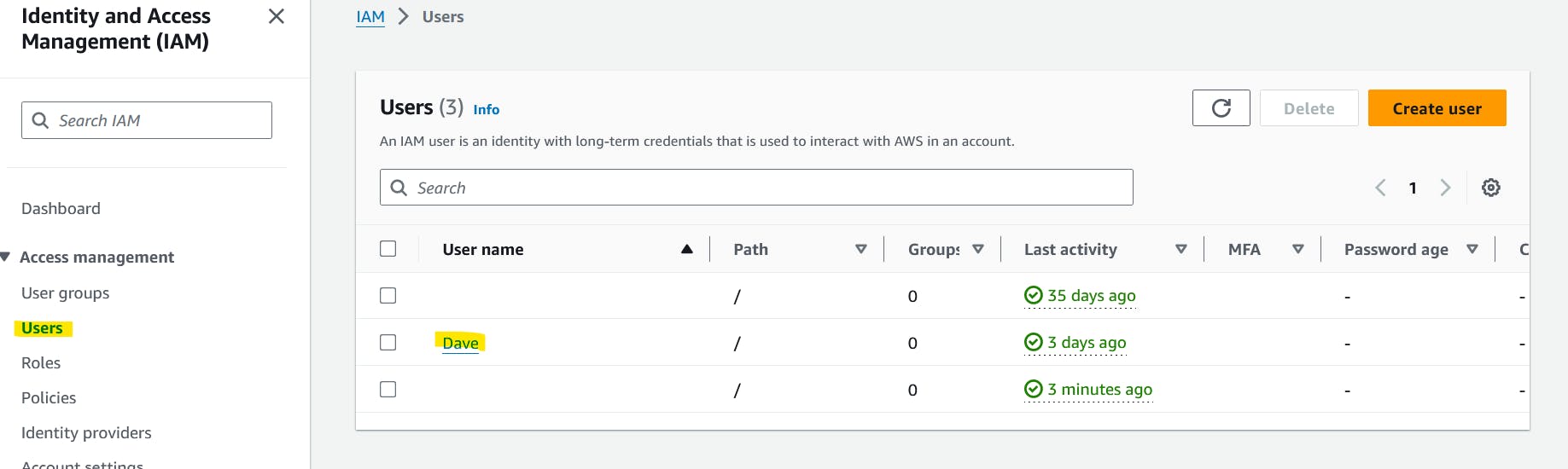

Create IAM user "Dave"

Create an S3 Bucket.

Create a Bucket policy for IAM User "Dave"

enough talking, let's go forward...😉

Clone The Project Code

Clone the project to your local device as follows:

pushd ~ # Change Directory

git clone https://github.com/Mohamed-Eleraki/terraform.git

pushd ~/terraform/AWS_Demo/07-S3BucketPolicy01

- Open it in VS Code, or any editor you like

code . # open the current path into VS Code.

Terraform Resources + Code Steps

Once you open the code in your editor, you will notice that the resources have already been created. However, we will discover together how to create them step by step.

Create an S3 Bucket

- Create a new file called

s3.tf

- Create the S3 bucket resources and the S3 bucket policy as below

# Create S3 Bucket
resource "aws_s3_bucket" "s3-01" {
  bucket = "eraki-s3-dev-01"

  force_destroy       = true # force destroy even if the bucket is not empty
  object_lock_enabled = false

  tags = {
    Name        = "eraki-s3-dev-01-Tag"
    Environment = "Dev"
  }
}

# Block public access
resource "aws_s3_bucket_public_access_block" "s3-01-dis-pubacc" {
  bucket = aws_s3_bucket.s3-01.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# Assign Bucket policy
resource "aws_s3_bucket_policy" "s3-01-policy" {
  bucket = aws_s3_bucket.s3-01.id

  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "statement1",
      "Effect": "Allow",
      "Principal": {
        "AWS": "${aws_iam_user.user-Dave.arn}"
      },
      "Action": [
        "s3:GetBucketLocation",
        "s3:ListBucket"
      ],
      "Resource": [
        "${aws_s3_bucket.s3-01.arn}"
      ]
    },
    {
      "Sid": "statement2",
      "Effect": "Allow",
      "Principal": {
        "AWS": "${aws_iam_user.user-Dave.arn}"
      },
      "Action": [
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Resource": [
        "${aws_s3_bucket.s3-01.arn}/*"
      ]
    }
  ]
}
EOF
}
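The policy pairs the bucket-level actions with the bucket ARN and the object-level actions with the `/*` object ARN. As an illustration only, the same document can be sketched in Python to make that split explicit. The helper name `build_bucket_policy` and the account ID in the usage are hypothetical and not part of the project code.

```python
import json


def build_bucket_policy(user_arn: str, bucket_arn: str) -> str:
    """Return a bucket policy JSON string mirroring the Terraform heredoc."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level actions apply to the bucket ARN itself.
                "Sid": "statement1",
                "Effect": "Allow",
                "Principal": {"AWS": user_arn},
                "Action": ["s3:GetBucketLocation", "s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Object-level actions apply to the keys under the bucket.
                "Sid": "statement2",
                "Effect": "Allow",
                "Principal": {"AWS": user_arn},
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }
    return json.dumps(policy, indent=2)


# Hypothetical account ID, for illustration only.
example_policy = build_bucket_policy(
    "arn:aws:iam::123456789012:user/Dave",
    "arn:aws:s3:::eraki-s3-dev-01",
)
```

Building the document from a data structure avoids hand-written JSON mistakes such as a missing comma. Terraform's built-in `jsonencode` function gives a similar benefit inside `s3.tf`, if you prefer it over a heredoc.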

Create IAM User

- Create a new file called

IAMuser.tf

- Create the IAM user resource as below

resource "aws_iam_user" "user-Dave" {
  name = "Dave"
}

Apply Terraform Code

After configuring your Terraform code, it's the exciting time to apply it and watch it become real. 😍

- First things first, let's make our code cleaner:

terraform fmt

- Planning is always a good practice (or even just apply 😁)

terraform plan -var-file="terraform-dev.tfvars"

- Let's apply, if no errors appear and you agree with the resources to be built

terraform apply -var-file="terraform-dev.tfvars" -auto-approve

Check S3 File Streaming

Export Access key

- Open the AWS IAM console, then select the user Dave

- Under the Security credentials tab, create an access key for the CLI and download the CSV file.

Login into EC2 machines

Log in to the EC2 machines via the AWS console using the VPC endpoint, as covered earlier in this series.

Configure AWS CLI Credentials

Configure the AWS CLI credentials on both EC2 machines, as follows:

aws configure --profile Dave

# paste the access key ID and secret access key from the CSV file

# region us-east-1

# output json
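`aws configure --profile Dave` stores the keys in the shared credentials file (by default `~/.aws/credentials`) under a `[Dave]` section. A minimal sketch for checking that the profile exists on each machine before going further, using only the standard library; the helper names here are hypothetical:

```python
import configparser
from pathlib import Path


def profile_is_configured(profile: str, credentials_path: Path) -> bool:
    """Return True if the profile has both access key fields set."""
    parser = configparser.ConfigParser()
    parser.read(credentials_path)  # a missing file simply yields no sections
    if not parser.has_section(profile):
        return False
    section = parser[profile]
    return bool(section.get("aws_access_key_id")) and bool(
        section.get("aws_secret_access_key")
    )


def default_credentials_path() -> Path:
    """Default location the AWS CLI uses for the shared credentials file."""
    return Path.home() / ".aws" / "credentials"
```

Calling `profile_is_configured("Dave", default_credentials_path())` on ec2-1 and ec2-2 should return `True` once the step above is done. It only checks that the fields are present, not that the keys are valid.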

Using the s3api command

By using s3api commands, you can manage your S3 storage programmatically through the AWS CLI. This offers greater automation and control compared to using the S3 console interface.

Check our configuration integration.

We will upload a dummy file from ec2-1 to the S3 bucket, then download the file to ec2-2.

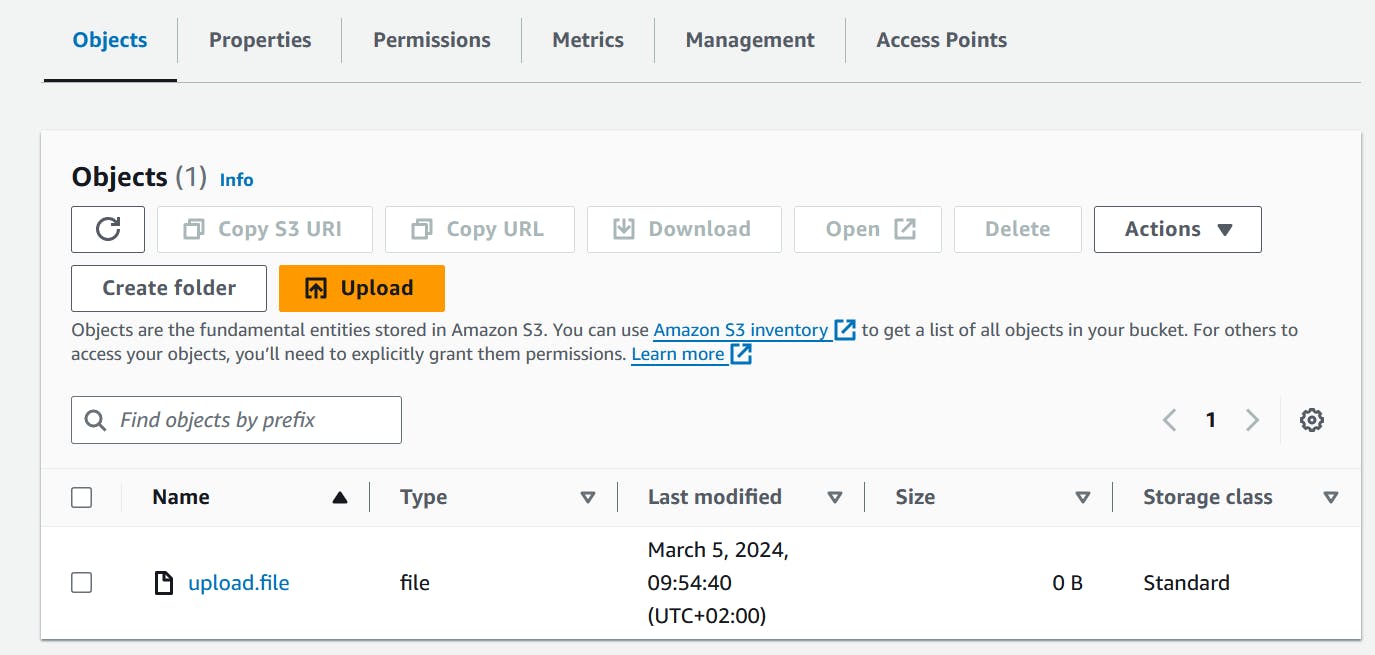

Uploading from ec2-1

Log in to ec2-1 via the AWS console as in the previous step.

- Create a dummy file

touch upload.file

- Use the command below to upload the upload.file file.

aws s3api put-object --bucket eraki-s3-dev-01 --key upload.file --body upload.file --profile Dave

The --body parameter in the command identifies the source file to upload. For example, if the file is in the root of the C: drive on a Windows machine, you specify c:\upload.file. The --key parameter provides the key name for the object in the S3 bucket.

Check the S3 bucket in the AWS console; the file should be uploaded.
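For comparison, the same PutObject call can be made from Python. This is a rough sketch, assuming boto3 is installed and the Dave profile is configured; `put_file` and `make_client` are hypothetical helper names. The `Bucket`, `Key`, and `Body` arguments correspond to the `--bucket`, `--key`, and `--body` CLI parameters.

```python
def put_file(s3_client, bucket: str, key: str, body_path: str) -> dict:
    """Upload one file with PutObject, mirroring --bucket/--key/--body."""
    with open(body_path, "rb") as body:
        # Body is the local source file; Key is the object name in the bucket.
        return s3_client.put_object(Bucket=bucket, Key=key, Body=body)


def make_client(profile_name: str = "Dave"):
    """Build an S3 client from a named CLI profile (requires boto3)."""
    import boto3  # imported lazily so put_file has no hard dependency

    return boto3.Session(profile_name=profile_name).client("s3")
```

On ec2-1 this would be used as `put_file(make_client(), "eraki-s3-dev-01", "upload.file", "upload.file")`.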

Downloading to ec2-2

Log in to the ec2-2 machine as in the previous step.

- Use the following command to download the file

aws s3api get-object --bucket eraki-s3-dev-01 --key upload.file upload.file --profile Dave

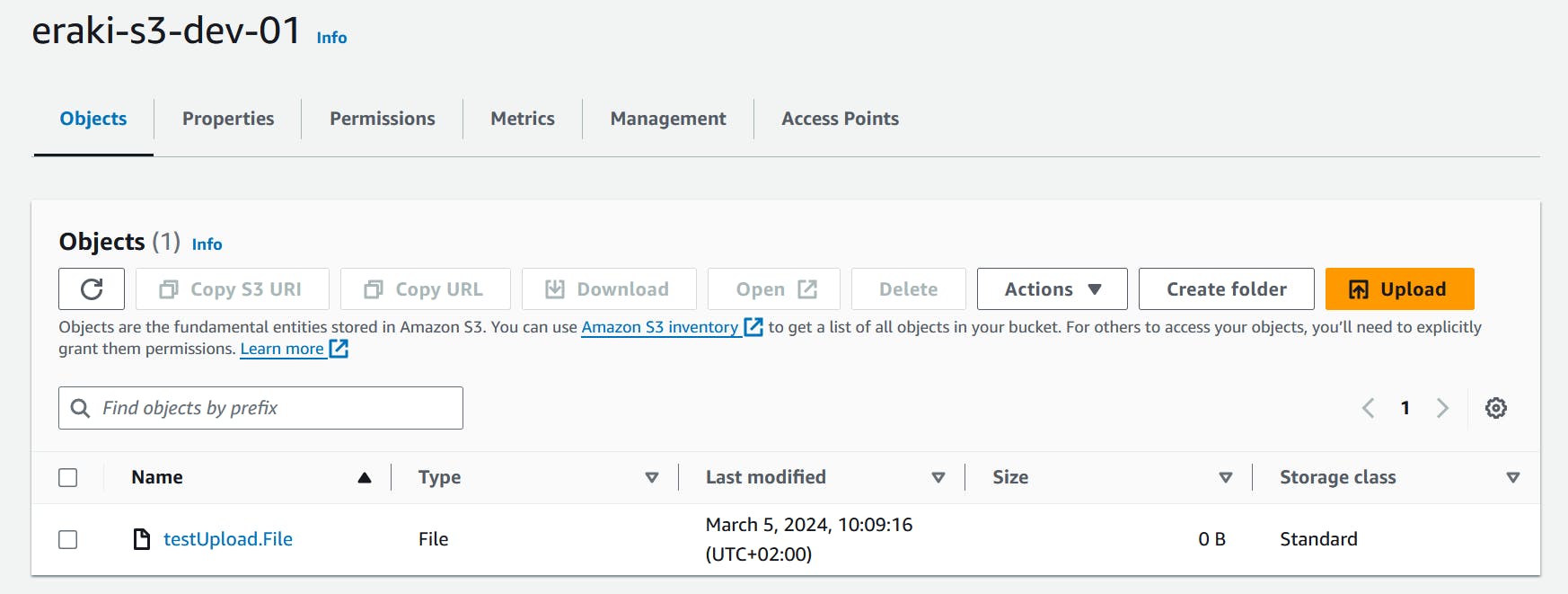
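In the get-object command, the trailing `upload.file` argument is the local output file. A rough Python sketch of the same GetObject call, assuming an S3 client built with boto3 (for example `boto3.Session(profile_name="Dave").client("s3")`); `get_file` is a hypothetical helper name:

```python
import shutil


def get_file(s3_client, bucket: str, key: str, outfile: str) -> int:
    """Download one object with GetObject and return its reported size."""
    response = s3_client.get_object(Bucket=bucket, Key=key)
    with open(outfile, "wb") as out:
        # response["Body"] is a file-like stream of the object's bytes.
        shutil.copyfileobj(response["Body"], out)
    return response["ContentLength"]
```

On ec2-2 this would be called as `get_file(s3_client, "eraki-s3-dev-01", "upload.file", "upload.file")`. For the empty dummy file created with `touch`, the returned size should be 0.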

Using Python script

Uploading from ec2-1

Log in to ec2-1 using the endpoint as we discovered before.

- Create a dummy file called

upload.file

- Create a Python script as follows

vim upload.py

- Paste the content below:

import boto3

session = boto3.Session(profile_name="Dave")  # define the profile session
s3_client = session.client("s3")  # use the profile session

object_name = "testUpload.File"  # the object's name (key) in the S3 bucket
bucket_name = "eraki-s3-dev-01"
file_path = "/home/ec2-user/upload.file"

try:
    # upload_file returns None, so there is nothing to assign
    s3_client.upload_file(file_path, bucket_name, object_name)
    print(f"File '{file_path}' uploaded successfully to S3 bucket '{bucket_name}' / '{object_name}' !")
except Exception as e:
    print(f"Error uploading file: {e}")

- Adjust the script permissions

chmod 775 upload.py

- Install prerequisites:

# check python version

python3 --version

Python 3.9.16

# install pip3

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py && python3 get-pip.py

# install boto3 library

pip3 install boto3

- Run the script

python3 upload.py

- Check S3 Console

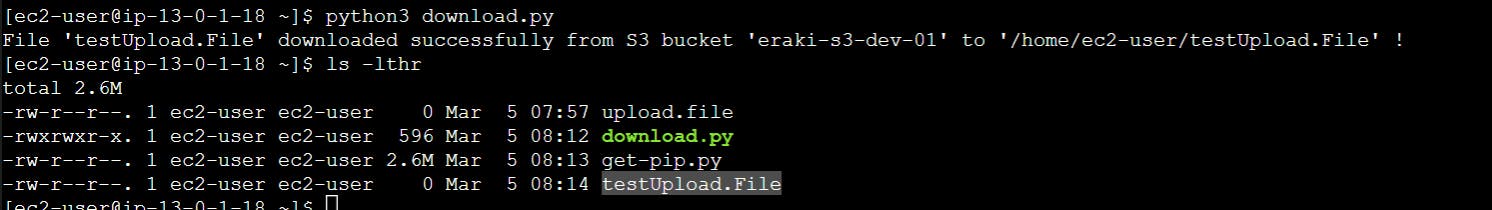
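The console check can also be done from the machine itself, since the bucket policy grants Dave `s3:ListBucket`. A minimal sketch, assuming an S3 client created as in `upload.py`; `list_keys` is a hypothetical helper name:

```python
def list_keys(s3_client, bucket: str) -> list:
    """Return every object key in the bucket, following pagination."""
    keys = []
    kwargs = {"Bucket": bucket}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        # "Contents" is absent when a page (or the bucket) has no objects.
        keys.extend(item["Key"] for item in page.get("Contents", []))
        if not page.get("IsTruncated"):
            return keys
        # Ask for the next page, starting where this one stopped.
        kwargs["ContinuationToken"] = page["NextContinuationToken"]
```

After running `upload.py`, `"testUpload.File" in list_keys(s3_client, "eraki-s3-dev-01")` should be true.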

Downloading to ec2-2

Log in to ec2-2 as in the previous step

- Create a Python script as follows

vim download.py

- Paste the following content

import boto3

session = boto3.Session(profile_name="Dave")  # define the profile session
s3_client = session.client("s3")

object_name = "testUpload.File"  # the name of the file in the S3 bucket you want to download
bucket_name = "eraki-s3-dev-01"
download_path = "/home/ec2-user/testUpload.File"  # local path to save the downloaded file

try:
    s3_client.download_file(bucket_name, object_name, download_path)
    print(f"File '{object_name}' downloaded successfully from S3 bucket '{bucket_name}' to '{download_path}' !")
except Exception as e:
    print(f"Error downloading file: {e}")

- Adjust the script permissions

chmod 775 download.py

- Install the prerequisites

# check python version

python3 --version

Python 3.9.16

# install pip3

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py && python3 get-pip.py

# install boto3 library

pip3 install boto3

- Run the script as follows

python3 download.py
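To confirm the file arrived intact, one option (not part of the original project) is to compare a checksum of the source file on ec2-1 with the downloaded copy on ec2-2. A small sketch with a hypothetical helper `sha256_of`:

```python
import hashlib


def sha256_of(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read fixed-size chunks so large files do not need to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Run `sha256_of("/home/ec2-user/upload.file")` on ec2-1 and `sha256_of("/home/ec2-user/testUpload.File")` on ec2-2. The two digests should match if the transfer preserved the content.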

Destroy environment

Destroying with Terraform is very simple. However, we should first destroy the resources that were created manually. Follow the steps below.

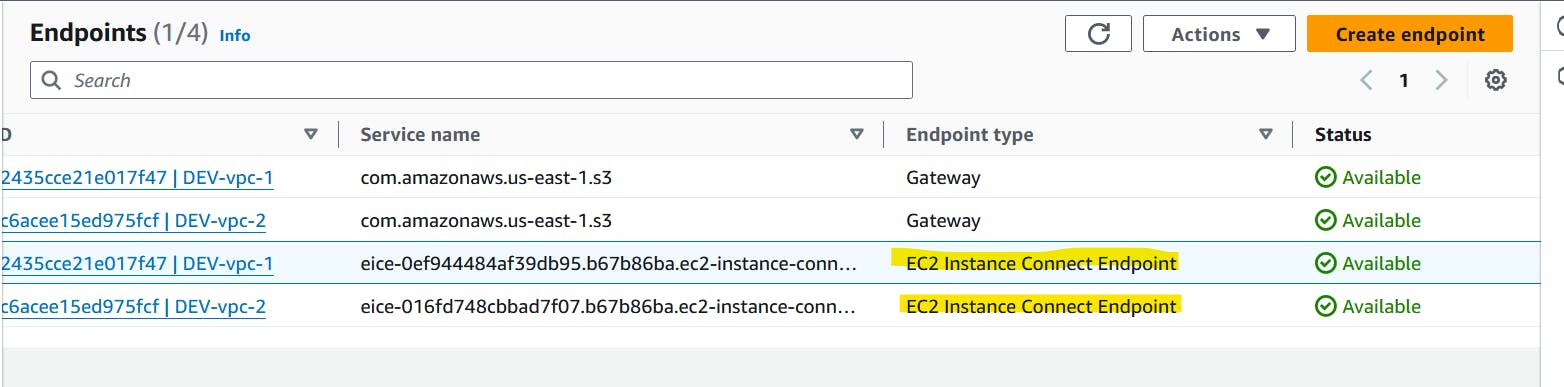

Delete the VPC endpoint (Manual resource)

- Open the VPC console and locate Endpoints under the Virtual private cloud section

- Delete the EC2 Instance Connect Endpoints

Delete the Access key

- Open the IAM console, locate the user Dave, select the Security credentials tab, then delete the access key
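If you prefer a script over the console for this step, the access keys can be removed through the IAM API. This is only a sketch: it assumes an IAM client created with credentials that hold IAM permissions (for example `boto3.client("iam")` under an admin profile, since the bucket policy gives Dave no IAM access), and `delete_access_keys` is a hypothetical helper name.

```python
def delete_access_keys(iam_client, user_name: str) -> list:
    """Delete every access key of the user and return the deleted key IDs."""
    listing = iam_client.list_access_keys(UserName=user_name)
    deleted = []
    for metadata in listing["AccessKeyMetadata"]:
        key_id = metadata["AccessKeyId"]
        # Each key is removed individually, identified by its ID.
        iam_client.delete_access_key(UserName=user_name, AccessKeyId=key_id)
        deleted.append(key_id)
    return deleted
```

Calling `delete_access_keys(iam_client, "Dave")` returns the IDs that were removed, which should be the single key created earlier.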

Destroy environment using Terraform

Once you have ensured that the EC2 Instance Connect Endpoints have been deleted, run the following command

terraform destroy -var-file="terraform-dev.tfvars"

Conclusion

By integrating with S3, organizations can gain a number of benefits, including improved data security, simplified data management, and increased operational efficiency. S3 provides a secure and scalable storage solution for MFT files, alongside the AWS CLI s3api command and the boto3 AWS library for managing file streaming.

That's it. Very straightforward, very fast🚀. I hope this article inspired you, and I will appreciate your feedback. Thank you.